Cyberattacks and AI Misinformation: Market and Economic Fallout

In our view, a major cyberattack is a tail risk, while a huge AI misinformation crisis is a modest risk. Russia, China and Iran are less likely to launch a state-sponsored cyberattack, both for geopolitical reasons and because of uncertainty over president-elect Donald Trump’s response. A huge AI misinformation crisis could cause financial market problems if it impacts payment systems and undermines confidence in tech and the economy, though central banks would respond with large-scale liquidity injections. Any major crisis would likely also be a risk-off event in financial markets.

A major cyberattack and a huge AI misinformation crisis are tail-to-modest-risk alternative scenarios for 2025. What would be the market and economic fallout?

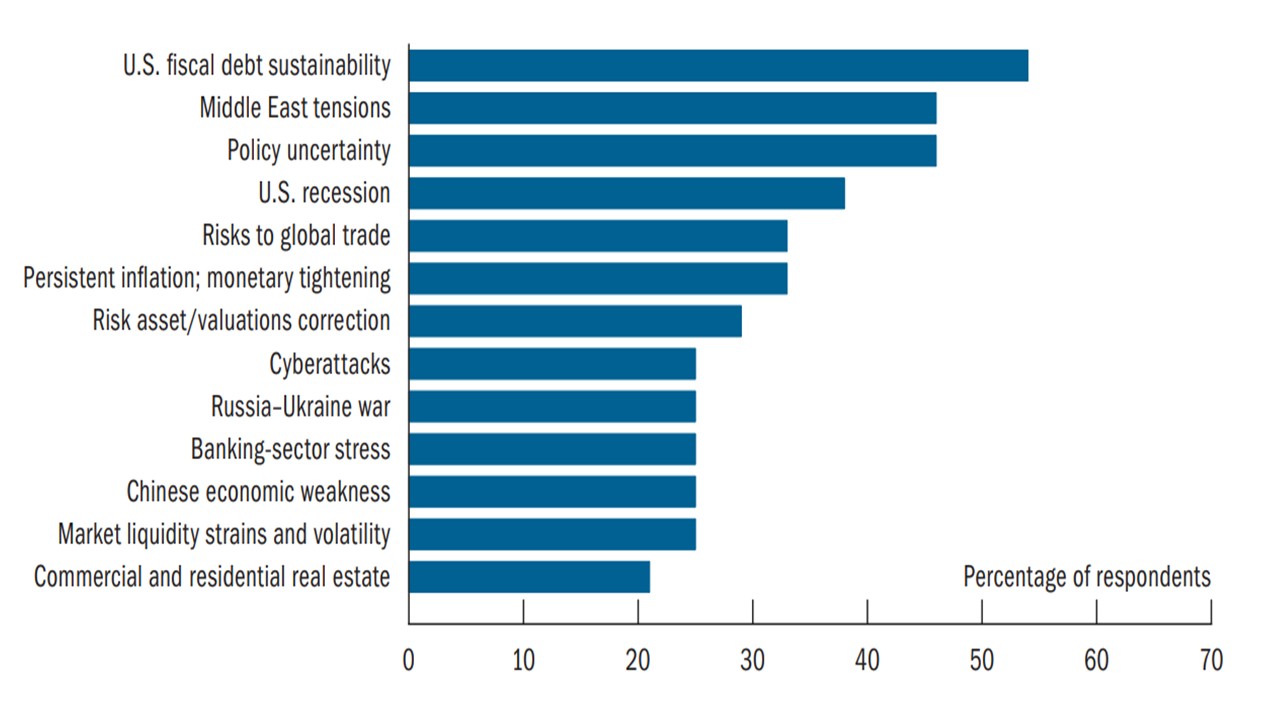

Figure 1: Most Cited Potential Shocks Over Next 12-18 months

Source: Federal Reserve Financial Stability Report Nov 2024 (NY Fed Survey)

Starting with a major cyberattack, the odds of a state-sponsored attack by a large country are likely lower in 2025 than in 2024. Russia is moving towards a negotiating phase on the Ukraine war, and a major cyberattack on Ukraine or EU countries would undermine Putin’s ability to achieve most of what he wants from president-elect Donald Trump. China is not considering an invasion of Taiwan (large-scale cyberattacks can precede invasions, e.g. Ukraine in 2022) and will want to avoid upsetting a fluid relationship with a U.S. that is threatening a trade war. Finally, Iran would likely fear that a major cyberattack would lead to retaliation by Israel and maximum economic pressure from Trump as U.S. president. The risk of a physical attack on major infrastructure (e.g. power grids) also seems lower in 2025 than in 2024. This does not rule out a mid-level cyberattack such as the one on the U.S. Treasury reported to be by Chinese hackers, which appeared to steal documents rather than deploy vicious malware to semi-permanently cripple systems.

A major ransomware-style cyberattack by criminal organisations is possible, but it would likely target a specific institution to extract payment rather than core banking or financial infrastructure, where the adverse effect could be wide and systemic. It is also difficult for criminal organisations to leap from mid-level to major cyberattack capabilities. The economic effect of a major and prolonged cyberattack against one company is likely small, though the market effects could extend wider if the target is an interconnected bank and the attack disrupts payments. The November 2024 Federal Reserve Financial Stability Report (Figure 1) showed that this risk is on the Fed’s radar. The Fed also acknowledges that the effect of a major cyberattack could be amplified by existing vulnerabilities in the U.S. financial system, for example by triggering funding runs or asset fire sales.

The second alternative is that a huge AI misinformation crisis causes distrust in an economy or a financial system. Individuals have a certain tolerance for misinformation, which means that moderate AI disinformation has little lasting economic effect (e.g. Apple’s recent problems with inaccurate AI-generated news). The key elections in 2024 do not appear to have been severely affected by AI misinformation, and moderate misinformation had no overall impact. However, large language models are known to be vulnerable to malicious users (e.g. via prompt injection). A huge AI misinformation crisis, whether accidental or caused by a malicious actor, that shakes confidence in a key financial institution could cause problems. Some worry that the Trump administration’s style and focus on slimming down regulation would undermine crisis management. Even so, the Fed and other central banks have many tools for liquidity support and market stabilisation, as was seen in the global financial crisis (GFC) and the 2020 COVID crisis. Tech companies’ share prices could also be hurt by a huge AI misinformation campaign if it raises concerns over revenue and earnings prospects from the AI-related applications that are the key focus of the current multi-year AI wave.

In our view, a major cyberattack is a tail risk over the next 12-18 months, while a huge AI misinformation crisis is a modest risk.